1. What is Data Anonymization?

Data anonymization is the process of protecting private or sensitive information by erasing or encrypting identifiers that connect an individual to stored data. The goal is to allow data to be used for analysis and research while ensuring individual privacy. Techniques such as pseudonymization, generalization, and randomization are employed to achieve this, but they often come with significant drawbacks.

2. Legacy Data Anonymization Techniques:

a. Data Masking:

Data masking involves replacing original data with fictitious yet plausible data. For instance, replacing real names with random names while keeping the format and structure of the data intact. This technique is widely used in software testing and user training environments to protect sensitive information without compromising the dataset's usability.

b. Pseudonymization:

Pseudonymization replaces identifiable information with pseudonyms or aliases. While this helps preserve the data structure, it does not fully anonymize the data, as re-identification is possible if the pseudonyms can be reverse-engineered. This method is commonly used in medical research and employee record management where maintaining consistent data structure is crucial.

c. Generalization:

Generalization reduces the granularity of data to prevent identification. For example, specific ages can be generalized into age ranges (e.g., "30-40" instead of "35"). This technique is used in demographic studies and market research, but it often leads to a loss of data utility, making detailed analysis difficult.

d. Randomization:

Randomization adds random noise to data values to obscure their true nature. For example, birthdates might be shifted by a random number of days. While straightforward to implement, randomization can degrade the data's usefulness for analysis, especially if not carefully managed.

e. Data Perturbation:

Data perturbation modifies data slightly by adding random noise or rounding values. This technique aims to maintain the overall statistical properties of the dataset while protecting individual records. However, it can still be vulnerable to certain types of attacks and may distort the data.

f. Data Redaction:

Data redaction involves completely removing or obscuring sensitive data values. For example, redacting specific names or identifiers from a document. This ensures privacy by eliminating sensitive information but can significantly reduce data utility and lead to gaps in the data.

g. Data Swapping:

Data swapping involves swapping values within the dataset, such as swapping birth dates or addresses among different records. This method can maintain data utility but might still pose a risk of re-identification if the swaps are not random enough.

h. K-anonymity:

K-anonymity ensures that each record is indistinguishable from at least k-1 other records based on certain attributes. This reduces the risk of re-identification by grouping similar records. However, it can be vulnerable to homogeneity and background knowledge attacks.

3. What Do Data Scientists Need from Data in Modern Times?

Data scientists today require high-quality, detailed data for advanced analytics, machine learning, and AI applications. The data needs to retain its utility and accuracy to derive meaningful insights. Additionally, it must comply with stringent data privacy regulations, such as GDPR and CCPA, which mandate robust anonymization methods to protect individual privacy.

Unfortunately, legacy data anonymization techniques are not enough anymore. The increasing demand for high quantities of data to train ML models in the pursuit of developing artificial intelligence has led to some major challenges for anonymized data. For one, data anonymization has an inverse relation with data utility. Therefore the more we suppress, encrypt, destroy, or mask data, the more we lose data utility. This places organizations with a hard trade-off where both options have equally negative consequences.

Furthermore, as technology advances, legacy data anonymization techniques are finding it hard to keep up with advanced hacking methods. This exposes organizations to intense scrutiny and lawsuits up to millions of dollars. Making data anonymization a lose-lose scenario in both data utility and data protection.

4. Why Legacy Data Anonymization Techniques are Failing:

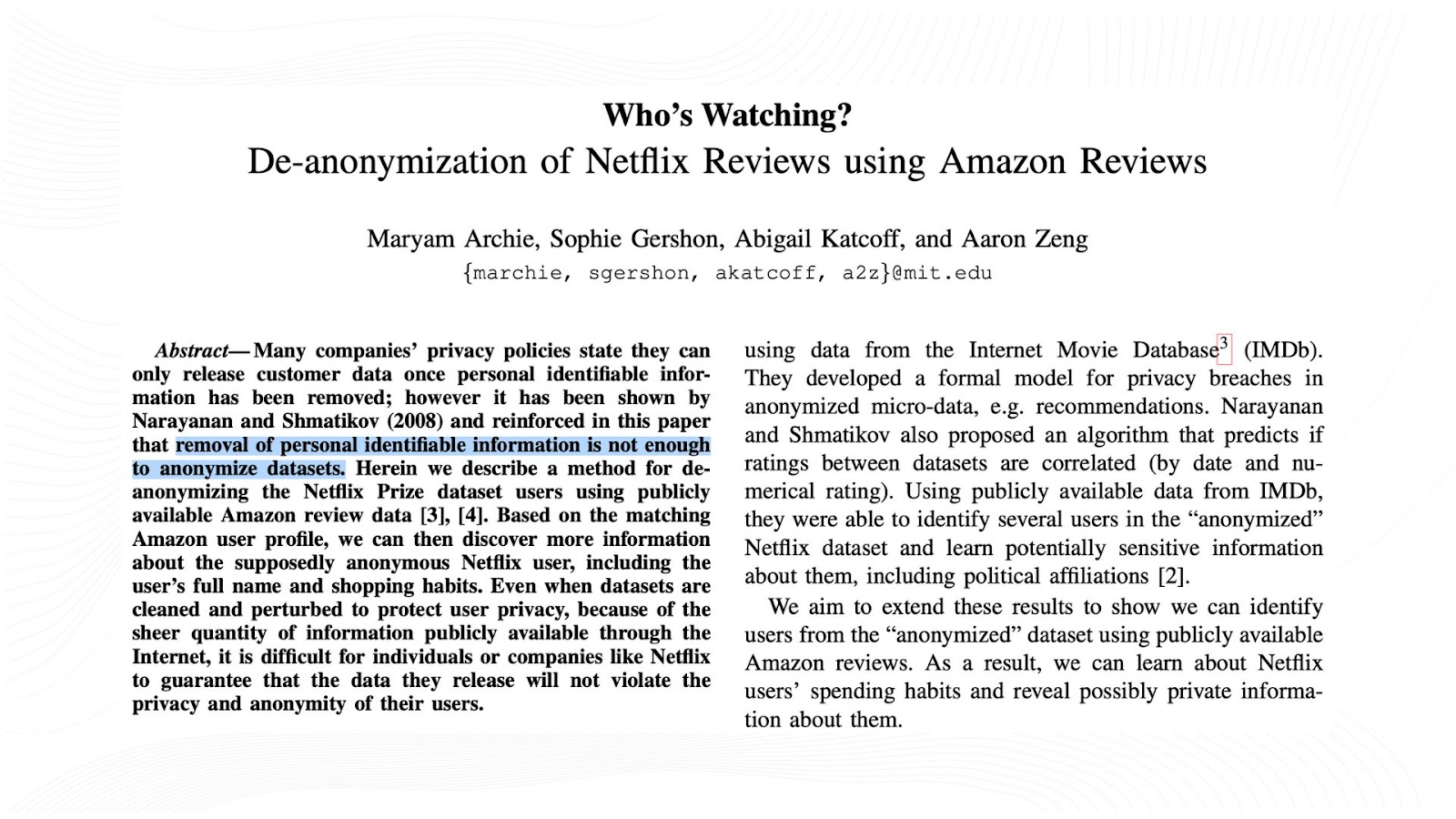

a. Insufficient Protection Against Re-identification:

Legacy techniques often fail to protect against re-identification attacks. Indirect identifiers, like gender, date of birth, and zip code, can easily be combined to re-identify individuals, even when direct identifiers are removed. This vulnerability undermines data privacy and data security in anonymized datasets.

b. Loss of Data Utility:

Techniques such as generalization and randomization degrade the quality and utility of the data. Generalization reduces data granularity, making it less useful for detailed analysis, while randomization introduces noise that can obscure important patterns and correlations within the data. This loss of data utility is particularly problematic for machine learning (ML) models and advanced analytics, which require high-quality, detailed data to function effectively.

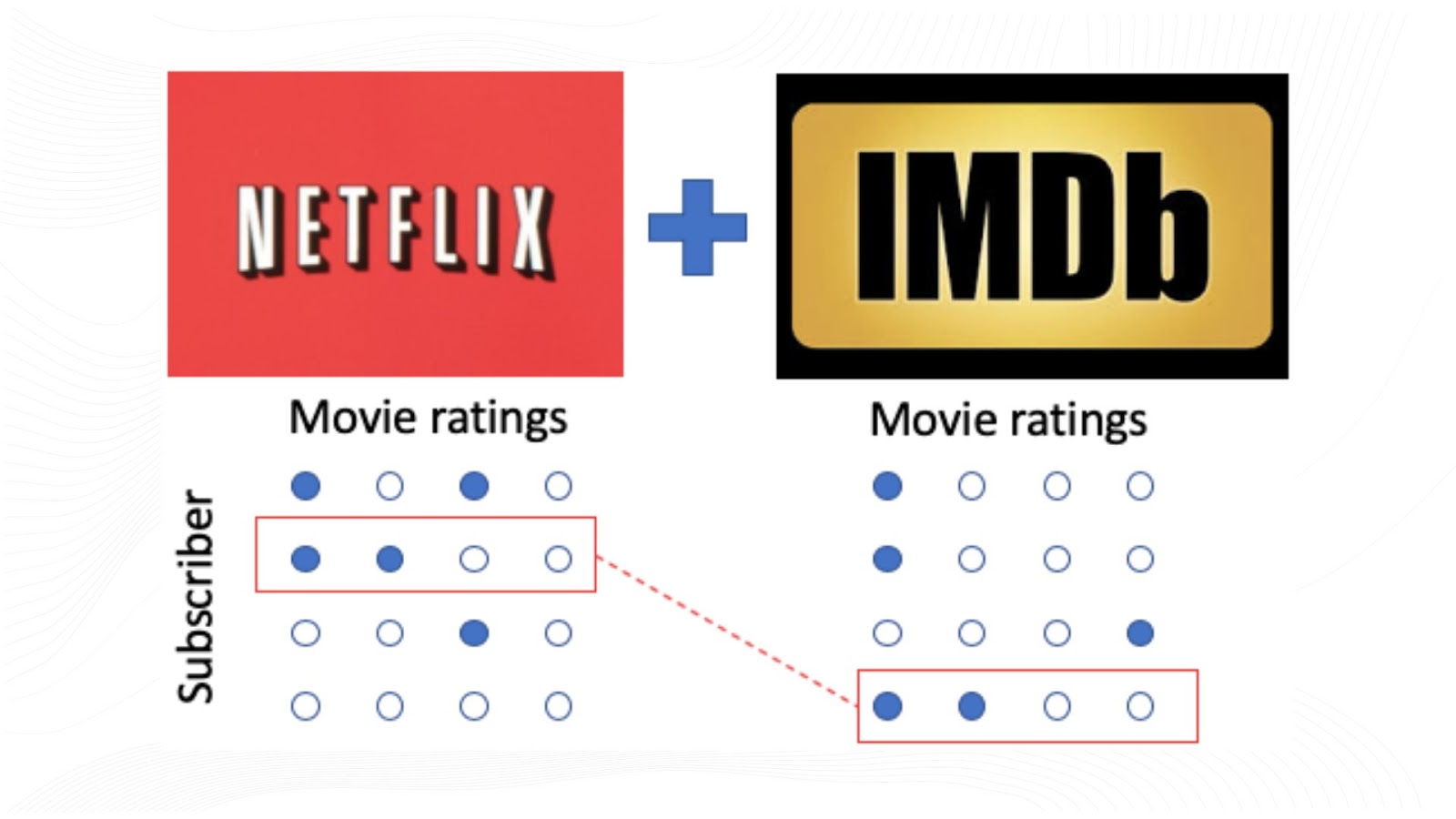

c. Emergence of Linkage Attacks:

Linkage attacks, where anonymized datasets are combined with other datasets to re-identify individuals, are becoming more common. This exploits the presence of quasi-identifiers in multiple datasets, making it possible to match anonymized records with identifiable information from other sources. Such attacks pose a significant threat to data privacy and data security.

d. Static Anonymization Techniques:

Many legacy anonymization methods are static, meaning they do not adapt to new data or changing contexts. This static nature makes them susceptible to evolving privacy threats and more sophisticated re-identification techniques. As data environments and privacy threats evolve, static methods become increasingly inadequate.

e. Insufficient Protection for High-dimensional Data:

High-dimensional datasets, which contain numerous attributes, are particularly challenging to anonymize effectively. Techniques like k-anonymity, l-diversity, and t-closeness often fall short in protecting against re-identification in such datasets because they struggle to balance privacy and data utility in complex data environments. This shortfall impacts the usability of anonymized data in machine learning and data analytics.

f. Over-reliance on Pseudonymization:

Pseudonymization is often mistaken for anonymization. However, pseudonymized data can still be linked back to individuals if the pseudonyms are uncovered or if the data is combined with other datasets. This method does not provide true anonymization and fails to meet stringent data privacy and data security requirements.

g. Inadequate Handling of Correlated Data:

Anonymization techniques often fail to adequately address the issue of correlated data. When attributes within a dataset are correlated, simply anonymizing individual attributes may not prevent re-identification. Attackers can exploit these correlations to infer sensitive information, compromising data privacy and security.

h. Challenges with Dynamic Data:

Dynamic datasets that are continually updated pose a significant challenge for traditional anonymization methods. These methods typically do not account for changes over time, making it difficult to maintain privacy protections as new data is added. This is critical for maintaining data security and the reliability of anonymized data.

i. Incompatibility with Advanced Analytical Needs:

Modern data analytics, machine learning, and AI applications require high-quality, detailed data. Legacy anonymization techniques often strip away too much detail, rendering the data useless for these advanced applications. This incompatibility limits the practical use of anonymized data in modern data-driven environments, affecting the performance of ML models.

j. Emergence of Advanced Privacy Threats:

As data privacy concerns grow, so do the methods to breach anonymized datasets. Techniques like linkage attacks, where anonymized data is cross-referenced with other data sources to re-identify individuals, are becoming more common. This makes legacy anonymization methods increasingly vulnerable, necessitating more robust solutions that can withstand sophisticated privacy attacks and ensure data security.

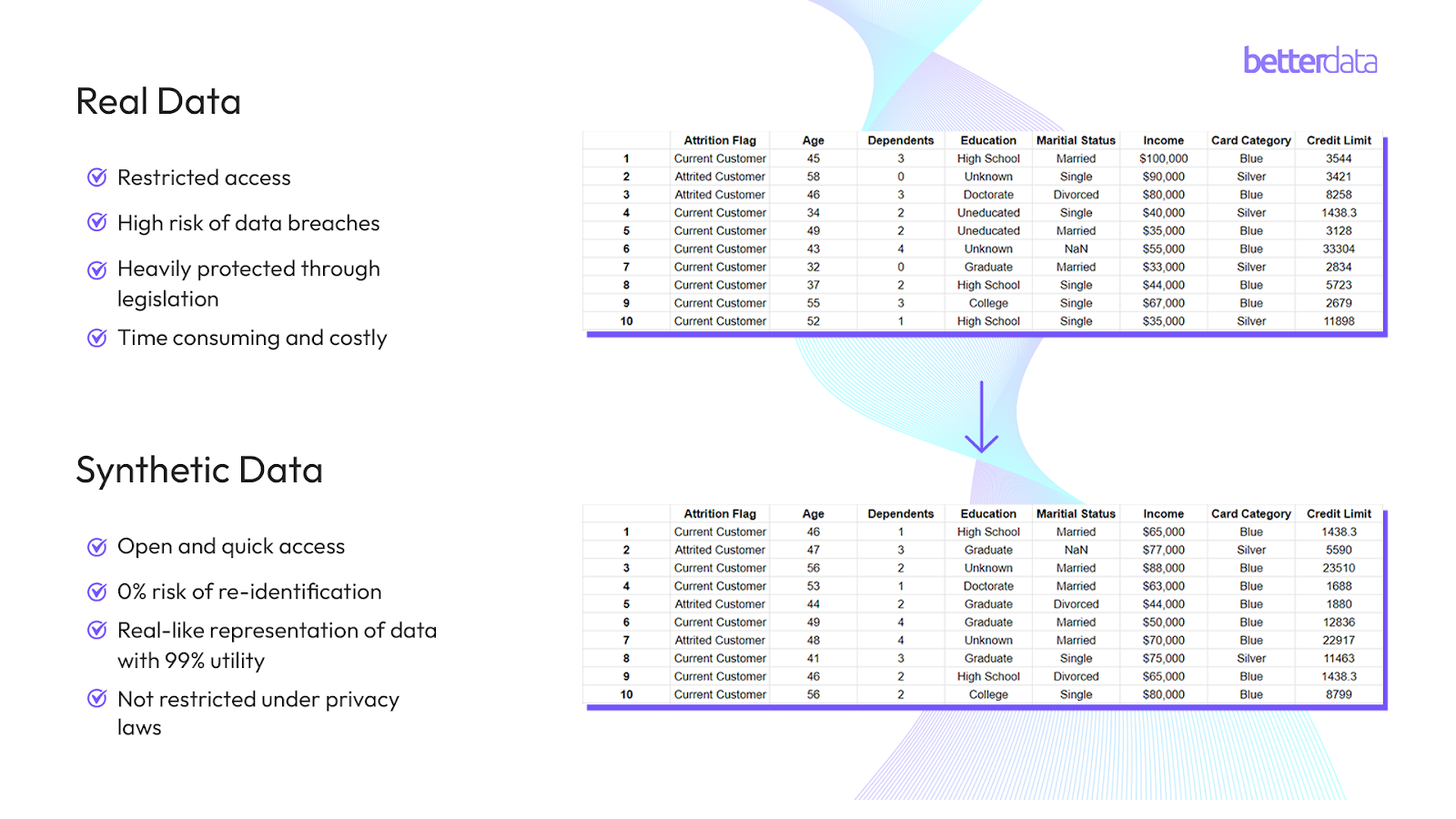

5. An Advanced Solution for an Advanced World: Synthetic Data

Synthetic data is artificially generated data that replicates the statistical properties and structure of real-world data without containing any actual personal information. This approach ensures privacy while maintaining high data utility, making it suitable for various applications, including machine learning, AI, and advanced analytics. Unlike traditional anonymization techniques, synthetic data provides a more robust and scale-able solution to data privacy challenges while maintaining data utility.

Read more about the advantages of using synthetic data here.

6. Why you should be using Synthetic Data

a. Enhanced Privacy Protection:

Synthetic data eliminates the risk of re-identification since it does not contain any real personal information. This provides a robust layer of privacy protection compared to traditional anonymization methods, which can often be reverse-engineered. By generating data that mimics the behavior and characteristics of real data without replicating actual individuals, synthetic data ensures that privacy is maintained without compromising on data quality. This is particularly crucial in sectors like healthcare, finance, and telecommunications, where privacy is paramount.

b. High Data Utility:

One of the key benefits of synthetic data is its ability to preserve the statistical properties and structure of real-world data. This high level of fidelity makes synthetic data extremely valuable for training machine learning models and conducting detailed data analysis. For instance, synthetic data can be used to train predictive models in a way that closely resembles training with real data, thus ensuring that the insights derived from the models are both accurate and reliable. This is essential for applications that require high-quality, granular data, such as fraud detection, customer segmentation, and predictive maintenance.

c. Scalability:

Synthetic data can be generated at scale, making it ideal for large datasets required in big data and AI applications. Unlike real-world data, which may be limited in availability and scope, synthetic data can be produced in virtually unlimited quantities. This scalability is particularly beneficial for training deep learning models, which require vast amounts of data to achieve high performance. Moreover, synthetic data can be generated to reflect a wide range of scenarios and conditions, enabling comprehensive testing and validation of models.

d. Compliance with Privacy Regulations:

Synthetic data inherently complies with privacy regulations like PDPA, GDPR, and CCPA, as it does not involve real personal information. This reduces the legal and compliance risks associated with data usage. Organizations can use synthetic data to develop and test new products, conduct research, and share data with third parties without the need for extensive legal reviews or the risk of privacy breaches. This compliance aspect is critical for industries that are heavily regulated and need to ensure that their data practices meet stringent privacy standards.

e. Versatility:

Synthetic data can be tailored to specific use cases, ensuring that the generated data meets the needs of various analytical and operational requirements. It is adaptable and can be used across multiple domains, from healthcare to finance. For example, in healthcare, synthetic data can be used to simulate patient records for training and testing electronic health record (EHR) systems, while in finance, it can be used to model financial transactions for fraud detection algorithms. This versatility makes synthetic data a powerful tool for a wide range of applications, enabling organizations to innovate and experiment without the constraints imposed by real-world data limitations.

7. Why is Synthetic Data Better than Legacy Data Anonymization?

While legacy data anonymization techniques have their place, they are increasingly inadequate in protecting privacy and maintaining data utility. Techniques like k-anonymity, l-diversity, and t-closeness often struggle to balance privacy and utility, especially with high-dimensional data and dynamic datasets. Synthetic data offers a viable and advanced alternative, ensuring that organizations can leverage their data securely and effectively.

By addressing the limitations of traditional anonymization methods, synthetic data provides enhanced privacy protection, maintains high data utility for training ML models and conducting advanced analytics, and offers scalability and compliance with privacy regulations. This makes synthetic data an indispensable asset for modern data-driven organizations seeking to balance the demands of data privacy, data security, and analytical rigor.