1. What is Synthetic Data:

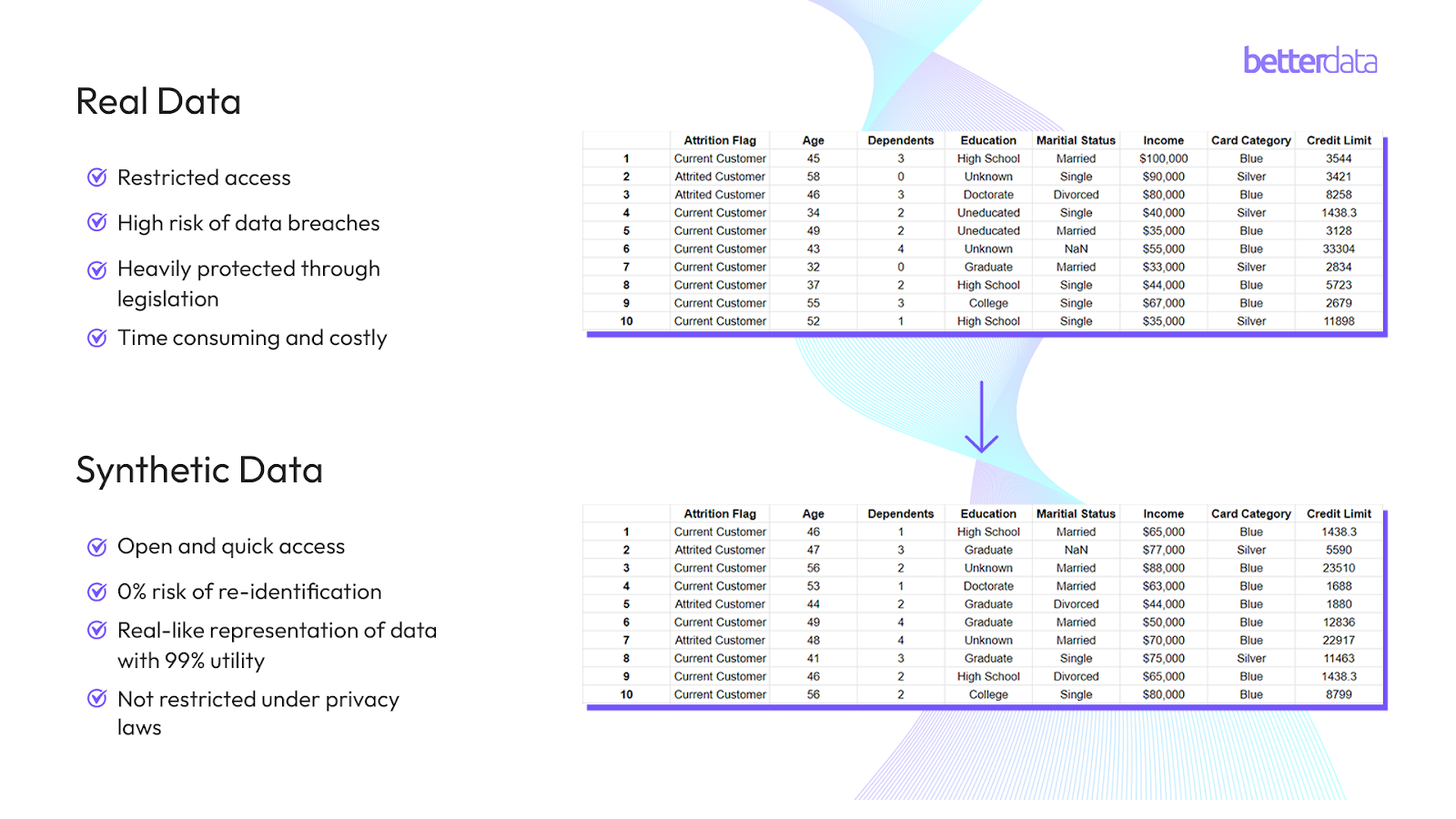

Synthetic data is a subset of Generative AI (GenAI) used as a substitute for real data in training machine learning (ML) models. It is trained on real data to create untraceable datasets that mimic the statistical properties of real-world data without privacy leakage implications, as synthetic data does not contain information about real individuals. This allows data consumers to access high-quality, privacy-preserving data faster and more safely.

2. Importance of Synthetic Data for ML Models:

ML models require massive amounts of data to operate, but data is hard to obtain, and good quality data takes time to organize and clean. Real-world data is protected by data-protection regulations such as the Personal Data Protection Act (PDPA) in Singapore and the General Data Protection Regulation (GDPR) in the EU, which impose massive fines for breaches of data privacy law(s). One of the key challenges with real data is that it contains human biases, is generally imbalanced, and is therefore prone to data drift over time.

An analysis of more than 5,000 images created with Stable Diffusion found that it took racial and gender disparities to extremes—worse than those found in the real world. - Bloomberg

While all of these are valid problems, bias in data can render ML models completely useless. In this article, we will discuss what bias is and how synthetic data can help remove bias in ML.

3. Types of Data Bias:

a. Undersampling:

Undersampling occurs when certain classes or groups within the dataset are underrepresented. This can lead to models that perform poorly on these minority classes because they have not been adequately learned during training. Undersampling can happen due to various reasons, such as data collection methods, availability of data, or even intentional/unintentional neglect. Models trained on undersampled data can exhibit poor generalization to underrepresented classes, leading to biased predictions. Suppose a bank has a dataset where 99% of the transactions are legitimate (majority class) and 1% are fraudulent (minority class). To balance the dataset, the bank decides to undersample the legitimate transactions. If the bank randomly removes a large portion of the legitimate transactions, it might inadvertently exclude many types of legitimate behavior patterns crucial for distinguishing between fraudulent and non-fraudulent transactions. As a result, the trained model might fail to recognize legitimate variations in spending behavior, leading to higher false positive rates where legitimate transactions are incorrectly flagged as fraud.

b. Labeling Errors:

Labeling errors refer to instances where the data has been incorrectly labeled, which can introduce noise into the dataset and adversely affect model performance. Labeling errors can arise from human mistakes, automated labeling processes, or ambiguous data. Models trained on mislabeled data can learn incorrect patterns, leading to reduced accuracy and reliability. For example, consider a medical image classification task aimed at detecting cancerous tumors in radiology scans. If a significant number of images are incorrectly labeled due to human error—such as mislabeling healthy scans as having tumors and vice versa—the model will learn incorrect associations. This labeling error bias can result in a model that misclassifies healthy patients as having cancer (false positives) or, more critically, misses cancerous tumors (false negatives).

c. User-Generated Bias:

User-generated bias occurs when data analysts or engineers unintentionally introduce bias during data processing and model training. This type of bias can stem from various sources, including selection bias, confirmation bias, and overfitting. User-generated bias can lead to models that reflect the biases of the analysts rather than the true patterns in the data, resulting in unfair or inaccurate predictions, such as a hiring algorithm that unfairly favors certain demographic groups. For example, consider a movie recommendation system that relies on user ratings and reviews to suggest films. If a certain demographic, such as young adults, is more active in providing ratings and reviews, the recommendation model may become biased toward the preferences of this demographic. This user-generated bias can result in the system predominantly recommending movies that appeal to young adults while underrepresenting the preferences of other demographic groups.

d. Skewed or Underrepresented Samples:

Skewed samples occur when the dataset is disproportionately represented in certain features, leading to biased models. A training data set may be skewed or underrepresented, where an underrepresented group has less training data, resulting in poorer performance on those groups. Skewed sample bias can significantly impact the performance and fairness of ML models, particularly in predictive policing. For instance, consider a predictive policing system that uses historical crime data to forecast future crime hotspots and allocate police resources. If historical data is skewed towards certain neighborhoods due to over-policing, the training dataset will reflect this bias, showing higher crime rates in these areas. Consequently, the model will learn to disproportionately predict higher crime rates in these neighborhoods, leading to a self-fulfilling prophecy where more police resources are allocated to over-policed neighborhoods, exacerbating existing inequalities.

e. Limited Features in Training Sets:

Limited features refer to insufficient or incomplete data attributes used to train ML models. Historical data collection practices often recorded only a subset of potentially relevant attributes. The consequences of having limited features are significant and can lead to biased and suboptimal ML models. Such models miss opportunities, failing to leverage all relevant information to make informed decisions, which undermines trust in the fairness and reliability of automated decision-making systems, particularly in high-stakes applications like finance, healthcare, and employment.

4. Impact of Bias on Artificial Intelligence (AI):

The impact of bias on AI is profound and multifaceted, affecting the accuracy, fairness, and societal trust in AI systems. Bias in AI can arise from skewed training data, biased algorithms, or prejudiced design decisions, leading to models that systematically favor certain groups over others. In 2023, the US Equal Employment Opportunity Commission (EEOC) settled a suit against iTutor for $365,000. iTutor was accused of using AI-powered recruiting systems that automatically rejected female applicants aged 55 or older and male applicants aged 60 or older. This bias can manifest in various ways, such as discriminatory hiring practices, biased credit scoring, and unfair judicial outcomes, where AI systems perpetuate and exacerbate social inequalities. Technically, biased models can exhibit reduced generalization capabilities, performing well on overrepresented groups while failing on underrepresented ones, which compromises the robustness and reliability of AI applications. Furthermore, biased AI systems can erode public trust, as users become wary of automated decisions perceived as unfair or discriminatory. A 2019 paper showed that black patients received lower health emergency risk scores than people with lighter skin tones. Addressing bias in AI is crucial for ensuring ethical, equitable, and effective AI deployment, necessitating diverse and representative training data, bias detection and mitigation techniques, and continuous monitoring and evaluation.

5. How Synthetic Data Helps:

Synthetic data can play a crucial role in reducing AI bias by providing balanced and representative datasets that mitigate the limitations of real-world data. Synthetic data is artificially generated rather than collected from real-world events, allowing for the creation of datasets that include diverse and equitable representations of various demographic groups. This helps address issues like underrepresentation and limited features that often lead to biased AI models. By supplementing or replacing biased real-world data, synthetic data ensures that machine learning models are trained on a more comprehensive and unbiased dataset. Synthetic data generation techniques, such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), can simulate realistic and varied data points that reflect the full spectrum of potential scenarios and populations. This enhances the generalization capabilities of AI models, ensuring they perform well across different groups and conditions. Synthetic data can also be used to test and validate AI systems, identifying and correcting biases before deployment. By integrating synthetic data into the training process, developers can create fairer, more robust, and trustworthy AI systems that better serve diverse populations.

6. BetterData’s Bias-Correcting Synthetic Data System:

Many synthetic data companies have tried to address bias by increasing the number of samples of underrepresented groups. However, Betterdata’s ML team findings resonate with those in the ICML 2023 paper, which proved that simply adding synthetic data without considering the downstream ML model will not improve performance. Instead, Betterdata’s programmable synthetic data platform directly improves underrepresented classes of the downstream ML task by targeting incorrectly predicted samples and creating synthetic data to improve performance. Through this, Betterdata’s technology can consistently improve precision rates by up to 5%, improving positive predictions.

.jpg)