Synthetic data is most commonly known for its ability to anonymize production data into privacy-preserving alternative data through Generative Adversarial Networks (GANs), Large Language Models (LLMs), etc. making it almost impossible for anyone to collect relevant personal information identifiers (PII). However synthetic data, due to its many abilities in refining, enhancing, and balancing real data, can be used for several other purposes as well, especially for model training (ML). Data has become the cornerstone of all commercial and non-commercial ventures. Whether a financial institution wants to analyze the adoption rate of a new credit system or a public hospital wants to find out the rate of success of a new medicine, data tells all. But not without intense scrutiny, high collection, storage, and processing costs, and the looming threat of a data breach accompanied by a heavy fine. Synthetic data, though subject to some skepticism, has been hailed by many in the data sciences field as a viable and sustainable solution to the ‘Big Data Problem.’

1. Basics of Synthetic Data:

We have compiled a detailed guide to understanding synthetic data which you can read here. However, for the sake of simplicity, we will summarize the what and why of synthetic data here.

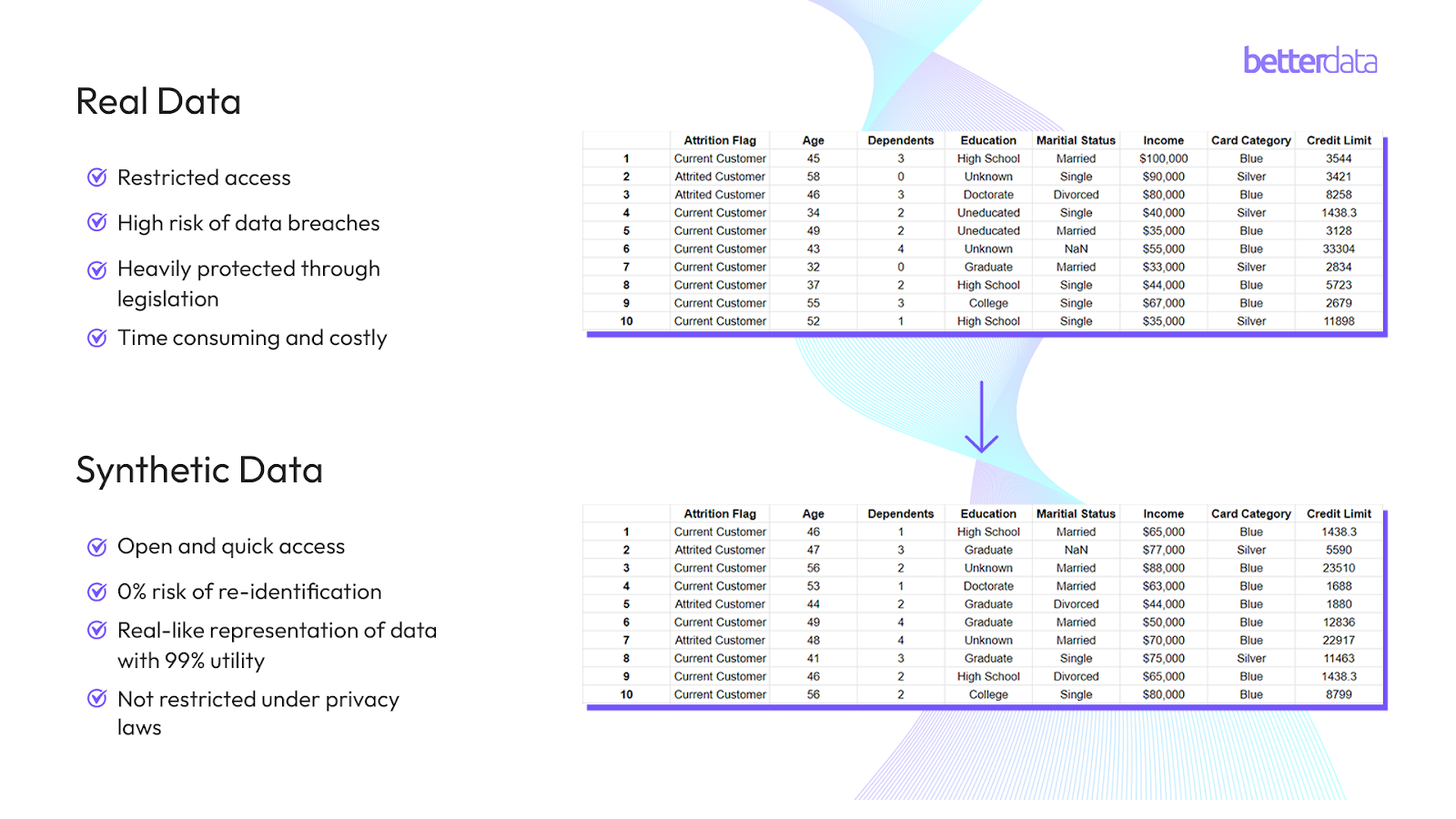

Synthetic data is artificially generated data that looks, feels, and functions similarly to real data. It is generated using generative AI models created through deep learning techniques that analyze the statistical properties of real data using Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), or Transformers to generate identical datasets with no personally identifiable information (PII).

Contrary to popular belief, synthetic data is not anonymized data (at least in the traditional sense). While synthetic data does, in theory, anonymize data, it does not encrypt, destroy, or mask real data features, preserving data utility. This allows data-driven enterprises to use and share data freely and safely while maintaining data utility and consumer privacy.

2. Synthetic Data for Predictive Modelling:

The biggest challenges in predictive modeling are:

- Lack of non-regular or rare user behavior

- Inadequate quantities of real data unless you buy heavily anonymized data at a high price

- Data imbalances and human biases

Let’s take a crude example: we are a health tech business based in Singapore launching a vertical in the European market. Our data would mostly be centered around Asia since that is our primary market. In addition, multiple variables are at play that have to be analyzed separately and in correlation to each other. The challenges mentioned above make it significantly harder for us to come to any logical conclusion; at best, we would have educated guesses. This is why synthetic data is important. It can narrow down our margin of error from (assumed numbers in this case) let’s say 12% to 6% on a 100 million dollar deal; we not only end up saving 6 million dollars but are also well-prepared to counter rare or unseen challenges.

3. Benefits of Synthetic Data for Predictive Modelling:

a. Data Privacy Concerns:

In industries like healthcare and finance, strict regulations often limit access to sensitive data. Synthetic data provides a workaround by allowing organizations to use data that mirrors real-world information without compromising privacy.

b. Data Scarcity:

In many cases, obtaining enough data to train an accurate predictive model is difficult. Synthetic data can be generated in large volumes, ensuring that models have enough examples to learn from.

c. Imbalanced Datasets:

Real-world datasets often suffer from imbalances, where certain outcomes or categories are underrepresented. Synthetic data can be used to create balanced datasets, improving the model's ability to generalize across different scenarios.

d. Testing and Validation:

Synthetic data allows for rigorous testing and validation of predictive models under various conditions. This helps in assessing the model's performance before deploying it in real-world situations.

e. Enhanced Model Accuracy:

With synthetic data, predictive models can be trained on a broader range of scenarios, including rare or extreme events that might not be present in real-world data. This leads to more accurate and reliable predictions.

f. Speed and Scalability:

Generating synthetic data is often faster and more scalable than collecting real data. This enables quicker iterations in model development, accelerating the overall process.

g. Regulatory Compliance:

By using synthetic data, organizations can navigate strict regulatory environments more easily. Since synthetic data is not tied to real individuals, it mitigates privacy risks, making it easier to comply with laws like GDPR.

h. Cost Efficiency:

Collecting and curating large datasets can be expensive and time-consuming. Synthetic data reduces these costs, allowing organizations to focus resources on refining their predictive models.

4. Models for Predictive Modelling with Synthetic Data:

a. Regression:

Regression models predict continuous numerical values based on input variables by identifying relationships between inputs and outputs. Common algorithms include linear, polynomial, and logistic regression.

b. Neural Network:

Neural networks mimic the human brain to learn complex input-output relationships for tasks like image recognition and natural language processing. Algorithms include MLP, CNN, RNN, LSTM, and GANs.

c. Classification:

Classification models categorize data into predefined groups using relationships between input variables. Algorithms include decision trees, random forests, SVM, KNN, and Naive Bayes.

d. Clustering:

Clustering models group similar data points based on input variable similarities to identify patterns. Popular algorithms include K-means, hierarchical clustering, and density-based clustering.

e. Time Series:

Time series models analyze and forecast data over time by identifying trends and patterns. Common algorithms include ARIMA, exponential smoothing, and seasonal decomposition.

f. Decision Tree:

Decision tree models use a tree structure to predict outcomes based on input variables. Algorithms include CART, CHAID, ID3, and C4.5 for both classification and regression tasks.

g. Ensemble:

Ensemble models combine multiple models to improve prediction accuracy and stability. Key algorithms include bagging, boosting, stacking, and random forest for both classification and regression.

Tropipay Case Study on Improving Prediction Modelling for Fraud Detection:

Tropipay, a leading electronic wallet for remittance payments, used machine learning models to enhance its anti-fraud detection systems. They analyzed a range of transactional and geographic data to identify risky transactions. In a comparison between traditional balancing techniques like undersampling and SMOTE and models trained on synthetic data, the latter showed superior results, with the key metric being Recall, which measures how accurately the models identified actual fraud cases.

Synthetic data models led to a 19% increase in correct fraud identification compared to traditional methods. Moreover, the overall accuracy increased slightly despite the Accuracy Paradox. Synthetic data significantly reduced false positives and produced more robust models that better mimic real-world patterns, offering a more reliable fraud detection approach than older techniques.

Ending Thoughts:

Synthetic data generated from excellent and robust generative AI models has the potential to improve prediction scores, allowing organizations to make correct and accurate assessments as seen in the case of Tropipay, where they improved their fraud detection by 19%. Similarly, healthcare can benefit from synthetic data by simulating patient data for rare diseases, thus improving diagnosis and treatment planning without violating patient confidentiality. Retailers can use synthetic data to forecast demand, optimize inventory, and personalize marketing strategies based on predictive models trained on diverse customer behavior scenarios. And, as already established by Tesla, synthetic data is crucial in training predictive models for autonomous vehicles, where real-world data on rare driving scenarios is limited. One trend you might notice with synthetic data is its applicability in different industries and scenarios. While many credit this to being primarily a ‘synthetic data’ feature, we like to believe that this is the true potential of data without any constraints. And while it is a fallacy to think that we will ever be able to use real consumer data for Machine Learning (ML), synthetic data is the closest we can get to utilizing the full potential of data in all its transformative and disruptive glory.

.png)